Google algorithms vs Google penalties, explained by an ex-Googler

This former Google quality analyst explains the difference between Google’s algorithms, manual actions, quality and penalties.

I mean, we’ve all experienced the power that this little input box wields, especially when it stops working. On its own, it can bring the world to a standstill. But you don’t have to live through a Google outage to feel the power this tiny input field exerts over the web and, ultimately, our lives. If you run a website and have worked your way up the search rankings, you likely know what I’m talking about.

It comes as no surprise that everyone with a web presence holds their breath whenever Google decides to push changes to its organic search results. Being primarily a software engineering company, Google aims to solve all of its problems at scale. And, let’s be honest, it’s practically impossible to solve the issues Google faces through human intervention alone.

Disclaimer: What follows derives from my knowledge and understanding of Google during my tenure between 2006 and 2011. Assume things might change at a reasonably fast pace, and that my perception may, at this stage, be outdated.

Google quality algorithms

In layman’s terms, algorithms are like recipes: step-by-step instructions, in a particular order, that aim to complete a specific task or solve a problem.

The likelihood that an algorithm produces the expected result decreases as the complexity of its task increases. So, more often than not, it’s better to solve a big, complex problem with multiple small algorithms, each handling a simple sub-task, than with a single giant algorithm that tries to cover every possibility.

As long as there’s an input, an algorithm will work tirelessly, outputting what it was programmed to output. The scale at which it operates depends only on available resources, such as storage, processing power and memory.

The algorithms discussed here are quality algorithms, which are usually separate from infrastructure. There are also infrastructure algorithms, which decide, for example, how content is crawled and stored. Most search engines apply quality algorithms only at the moment search results are served, meaning results are assessed for quality only upon serving.

Within Google, quality algorithms are seen as ‘filters’ that aim at resurfacing good content and look for quality signals all over Google’s index. These signals are often sourced at the page level for all websites and can then be combined to produce scores at the directory or hostname level, for example. For website owners, SEOs and digital marketers, the influence of algorithms can in many cases be perceived as a ‘penalty’, especially when a website doesn’t fully meet all the quality criteria and Google’s algorithms decide to reward other, higher-quality websites instead. In most of these cases, what the common user sees is a decline in organic performance. That’s not necessarily because the website was pushed down, but more likely because it stopped being unfairly scored, which can be either good or bad. In order to understand how these quality algorithms work, we first need to understand what quality is.
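As a rough illustration of how page-level signals might roll up into directory-level and hostname-level scores, here is a minimal Python sketch. Everything in it is an assumption for illustration: the scores, the hypothetical `aggregate_scores` helper, and the use of a plain average. Google’s actual signals and the way it combines them are not public.

```python
from collections import defaultdict
from statistics import mean
from urllib.parse import urlsplit


def aggregate_scores(
    page_scores: dict[str, float],
) -> tuple[dict[str, float], dict[str, float]]:
    """Roll hypothetical page-level quality scores up to directory and hostname level.

    Uses a plain arithmetic mean; a real system would likely weight pages differently.
    """
    by_directory: dict[str, list[float]] = defaultdict(list)
    by_host: dict[str, list[float]] = defaultdict(list)
    for url, score in page_scores.items():
        parts = urlsplit(url)
        # Directory = hostname + path up to and including the last "/".
        directory = parts.netloc + parts.path.rsplit("/", 1)[0] + "/"
        by_directory[directory].append(score)
        by_host[parts.netloc].append(score)
    dir_scores = {d: mean(s) for d, s in by_directory.items()}
    host_scores = {h: mean(s) for h, s in by_host.items()}
    return dir_scores, host_scores


if __name__ == "__main__":
    pages = {
        "https://example.com/blog/a": 0.8,
        "https://example.com/blog/b": 0.6,
        "https://example.com/shop/x": 0.2,
    }
    dirs, hosts = aggregate_scores(pages)
    print(dirs)   # one averaged score per directory
    print(hosts)  # one averaged score per hostname
```

In this toy setup, a weak section such as `/shop/` drags down the hostname-level average even though `/blog/` scores well, which is one way a site-wide score can move because of a single part of the site.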

Quality and your website

Quality is in the eye of the beholder. In other words, quality is a relative measure within the universe we live in. It depends on our knowledge, experiences and surroundings. What one person considers quality is likely different from what others consider quality. We can’t tie quality to a simple binary process devoid of context. For example, if I’m in the desert dying of thirst, do I care if a bottle of water has sand at the bottom?

For websites, that’s no different. Quality is, basically, Performance over Expectation. Or, in marketing terms, Value Proposition.

But wait… If quality is relative, how does Google dictate what is quality and what is not?

Actually, Google does not dictate what is and what is not quality. All the algorithms and documentation that Google uses for its Webmaster Guidelines are based on real user feedback and data. When users perform searches and interact with websites in Google’s index, Google analyses its users’ behavior and often runs multiple recurring tests to make sure its results are aligned with their intents and needs. This ensures that when Google issues guidelines for websites, they align with what Google’s users want, not necessarily with what Google unilaterally wants.

This is why Google often states that algorithms are made to chase users. So, if you chase users instead of algorithms, you’ll be in step with where Google is heading.

With that said, in order to understand and maximize a website’s potential to stand out, we should look at it from two different perspectives: the first is a ‘Service’ perspective, and the second, a ‘Product’ perspective.

Your website as a service

When we look at a website from a Service perspective, we should analyse all the technical aspects involved, from code to infrastructure: how it’s engineered to work, how technically robust and consistent it is, how it communicates with other servers and services, its integrations and its front-end rendering, among many other things.

But, on their own, all the technical bells and whistles don’t create value where none exists. They add to existing value and make any hidden value shine at its best. That’s why you should work on the technical details, but also look at your website from a Product perspective.

Your website as a product

When we look at a website from a Product perspective, we should aim to understand the experience users have on it and, ultimately, what value we are providing in order to stand out from the competition.

To make this less ethereal and more tangible, I often ask the question “If your site disappeared from the web today, what would your users miss that they wouldn’t find on any of your competitors’ websites?” I believe this is one of the most important questions to answer if you want to build a sustainable, long-lasting business strategy on the web.

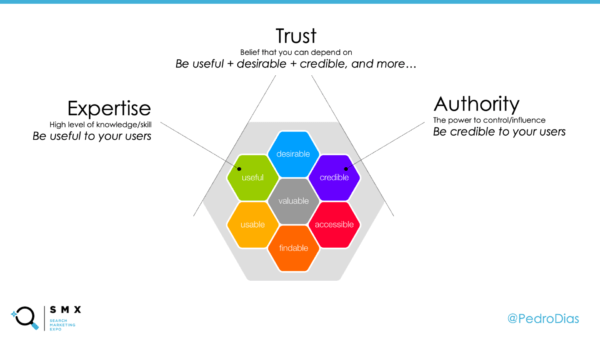

To further help with this, I invite everyone to look at Peter Morville’s User Experience Honeycomb. I made a few adjustments so we can relate it to the more limited concept of E-A-T (Expertise, Authoritativeness, Trustworthiness), which is part of Google’s Search Quality Rater Guidelines.

Most SEO professionals look deeply into the technical aspects of UX, like Accessibility, Usability and Findability (which is, in fact, SEO), but tend to overlook the qualitative (more strategic) aspects, like Usefulness, Desirability and Credibility.

In case you missed it, the central cell of the honeycomb stands for “Value”, which can only be fully achieved when all the surrounding factors are met. Applied to your web presence, this means that unless you look at the experience holistically, you will miss your website’s core objective: to create value, for you and for your users.

Quality is not static

In order to be perceived as “of quality”, a website must provide value, solve a problem or meet a need. Google constantly tests and pushes quality updates and algorithm improvements simply because quality is a moving target!

If you launch your website and never improve it, your competitors will eventually catch up with you, either by improving their website’s technology or by working on the experience and value proposition. Much like old technology becomes obsolete and deprecated, innovative experiences also tend to become ordinary over time and will most likely fail to exceed expectations. For example, back in 2007 Apple conquered the smartphone market with a touchscreen device. Nowadays, most people will not even consider a phone that doesn’t have a touchscreen. It has become a given and can no longer be used as a competitive advantage.

Just as SEO is not a one-time action (“I optimized once, therefore I’m permanently optimized”), any area that sustains a business must improve and innovate over time in order to remain competitive.

When all this is left to chance, or not given the attention it deserves to make sure users understand all these characteristics, that’s when websites start to run into organic performance issues.

Manual actions to complement algorithms

It would be naive to assume that algorithms are perfect and do everything they’re supposed to do flawlessly. The great advantage of humans in the ‘war’ of humans versus machines is that we can deal with the unexpected. Humans have the ability to adapt to and understand outlier situations, and to understand why something can be good although it might appear bad, or vice versa. That’s because humans can infer context and intention, whereas machines aren’t that good at it.

In software engineering, when an algorithm catches something it shouldn’t have, or misses something it should have caught, the errors are referred to as ‘false positives’ and ‘false negatives’ respectively. In order to correct algorithms, we need to identify those false positives and false negatives, a task that’s often best carried out by humans. So engineers often set confidence thresholds that determine when the machine should prompt for human intervention.
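A minimal Python sketch of the threshold idea follows, assuming a hypothetical model that outputs a spam probability per page. The `route` and `count_errors` helpers and the 0.9/0.3 cut-offs are illustrative assumptions, not Google’s values: confident cases are handled automatically, uncertain ones go to a human, and human labels are then used to count false positives and false negatives.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_FLAG = "auto_flag"        # confident enough to act without a human
    HUMAN_REVIEW = "human_review"  # uncertain: ask an analyst
    NO_ACTION = "no_action"        # confident the page is fine


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical values; real thresholds would be tuned on labelled data.
    flag_at: float = 0.9
    clear_below: float = 0.3


def route(spam_probability: float, t: Thresholds = Thresholds()) -> Route:
    """Decide what to do with a page given a model's spam probability."""
    if spam_probability >= t.flag_at:
        return Route.AUTO_FLAG
    if spam_probability < t.clear_below:
        return Route.NO_ACTION
    return Route.HUMAN_REVIEW


def count_errors(predicted_spam: list[bool], actual_spam: list[bool]) -> tuple[int, int]:
    """Return (false_positives, false_negatives) measured against human labels."""
    fp = sum(p and not a for p, a in zip(predicted_spam, actual_spam))
    fn = sum(a and not p for p, a in zip(predicted_spam, actual_spam))
    return fp, fn
```

Raising `flag_at` makes automatic action rarer (fewer false positives) at the cost of sending more cases to human review, which is the trade-off the thresholds are meant to control.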

What triggers a manual action?

Within Search Quality, there are teams of humans who evaluate results and look at websites to make sure the algorithms are working correctly, and also to intervene when the machine makes mistakes or can’t make a decision. Enter the Search Quality Analyst.

The role of a Search Quality Analyst is to understand what they’re dealing with by looking at the provided data, and to make judgement calls. These judgement calls can be simple, but are often supervised and approved or rejected by other Analysts globally, in order to minimize human bias. This often results in static actions that aim, among other things, to:

- Create a set of data that can later be used to train algorithms;

- Address specific and impactful situations where algorithms failed;

- Signal to website owners that specific behaviors fall outside the quality guidelines.

These static actions are often referred to as manual actions.

Manual actions can be triggered for a wide variety of reasons, but the most common ones aim to counteract manipulative behavior that, for some reason, managed to successfully exploit a flaw in the quality algorithms.

The downside of manual actions, as mentioned, is that they are static, not dynamic like algorithms. Algorithms work continuously and react to changes on websites, depending only on recrawling or algorithm refinements. A manual action, by contrast, remains in effect for as long as it was set to last (days, months or years), or until a reconsideration request is received and successfully processed.
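The static-versus-dynamic distinction can be sketched in a few lines of Python. This is a simplified, hypothetical model: `algorithmic_demotion` and `ManualAction` are illustrative names, and reducing a site’s state to a count of violations is an assumption made for brevity.

```python
from dataclasses import dataclass
from datetime import date, timedelta


def algorithmic_demotion(current_violations: int) -> bool:
    """Dynamic: recomputed from the site's current state on every re-evaluation."""
    return current_violations > 0


@dataclass
class ManualAction:
    """Static: stays in force until it expires or a reconsideration request succeeds."""
    applied_on: date
    duration: timedelta
    revoked: bool = False

    def is_active(self, today: date) -> bool:
        return not self.revoked and today < self.applied_on + self.duration

    def process_reconsideration(self, site_is_clean: bool) -> None:
        # Hypothetical rule: only a fully cleaned-up site gets the action revoked.
        if site_is_clean:
            self.revoked = True


if __name__ == "__main__":
    action = ManualAction(applied_on=date(2024, 1, 1), duration=timedelta(days=90))
    # The site is cleaned up: the algorithmic signal clears as soon as it is re-evaluated...
    print(algorithmic_demotion(current_violations=0))
    # ...but the manual action is still in force until expiry or reconsideration.
    print(action.is_active(date(2024, 2, 1)))
    action.process_reconsideration(site_is_clean=True)
    print(action.is_active(date(2024, 2, 1)))
```

With this model, cleaning up a site makes `algorithmic_demotion(0)` return `False` as soon as the site is re-evaluated, while a `ManualAction` stays active until its expiry date passes or `process_reconsideration(site_is_clean=True)` is called.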

Google algorithms vs manual actions in a nutshell

Here’s a brief comparison between algorithms and manual actions:

| | Algorithms | Manual actions |
|---|---|---|
| Aim | Resurfacing value | Penalizing behavior |
| Scope | Work at scale | Tackle specific scenarios |
| Nature | Dynamic | Static |
| Automation | Fully automated | Manual + semi-automated |
| Duration | Undefined | Defined (expiration date) |

Before applying any Manual Action, a Search Quality Analyst must consider what they are tackling and assess both the impact and the desired outcome. Some of the questions that often must be answered are:

- Does the behavior have manipulative intent?

- Is the behavior egregious enough?

- Will the Manual Action yield impact?

- What changes will the impact yield?

- What am I penalizing (widespread or single behavior)?

These aspects need to be properly weighed before any manual action is even considered.

How should website owners deal with manual actions

As Google moves more and more towards algorithmic solutions, taking advantage of artificial intelligence and machine learning to both improve results and fight spam, manual actions will tend to fade away and ultimately will completely disappear in the long run.

If your website has been hit with a manual action, the first thing you need to do is understand what behavior triggered it. That usually means that, first and foremost, you should have a comprehensive understanding of Google’s Technical and Quality Guidelines, and evaluate your website against them.

It’s easy to let yourself get caught up juggling all the steps and pieces of information at the same time. But, trust me, this is not the time to let the sense of rush, stress and anxiety take the lead. You want to be thorough, rather than fast.

Also, you want to keep the number of times you submit a reconsideration request to a minimum. Don’t treat it as trial and error. Gather all the information, do a clean sweep of your website, and fix everything. Then, and only then, submit a reconsideration request.

Recovering from manual actions

There’s a misconception that, if you are hit with a manual action and lose traffic and rankings, you will return to the same level once the manual action has been revoked. This couldn’t be further from the truth. You see, a manual action aims to suppress unfair leverage. So it wouldn’t make sense to return to the same organic performance after a cleanup and the lifting of the manual action; if you did, that would likely mean you had never been benefiting from whatever was infringing the Quality Guidelines in the first place.

Any website can recover from almost any scenario. Cases where a property is deemed unrecoverable are extremely rare. Nevertheless, you should have a full understanding of what you are dealing with. Bear in mind that manual actions and algorithmic issues can coexist, and sometimes you won’t see any improvement until you prioritize and solve all the issues in the right order. So, if you believe your website has been impacted negatively in search, start by looking at the Manual Actions view in Search Console, and then work your way from there.

Unfortunately, there’s no easy way to explain what to look for, or the symptoms of each and every algorithmic issue. Unless you have seen and experienced many of them, algorithmic issues can throw you off, as they not only stack but sometimes have different timings and thresholds to hit before they go away. The best advice I can give is: think about your value proposition, the problem you are solving or the need you are catering to. And don’t forget to ask your users for feedback. Ask their opinions on your business and the experience you provide on your website, or how they expect you to improve. When you ask the right questions, the results can be extremely rewarding.

Takeaways

Rethink your value proposition and competitive advantage: We are no longer in the dot-com boom. Having a website is not a competitive advantage on its own.

Treat your website as a product and innovate constantly: If you don’t push ahead, you’ll be run over. Successful websites constantly improve and iterate.

Research your users’ needs through User Experience: The priority should be your users first, Google second. If you’re doing it any other way, you’re likely missing out. Talk to your users and ask their opinions.

Technical SEO matters, but alone it will not solve anything: If your product or content doesn’t have appeal or value, it doesn’t matter how technically sound and optimized it is. Make sure you don’t miss out on the value proposition.